Aliens are real: they live in our data centers

And that's why "AI Teammates" / "AI Coworkers" are a flawed concept

Here’s a thought experiment: imagine if the last few years of AI progress unfolded entirely within a secretive company known for its dramatic keynotes. Then, at a highly anticipated event, they unveil technology akin to ChatGPT (the latest version with o1 & Voice Mode) by staging a rocket landing from Mars, complete with little green, antenna-ed beings who step out and start answering any question in any language—including Klingon, of course.

Now, answer this: if this spectacle had been the first broad public interaction with LLMs, instead of a friendly human-like chat interface, would we still collectively map LLMs onto the scale of "human/superhuman" intelligence, rushing to overhaul our B2B SaaS product roadmaps with AI features? Or would we regard them as an alien intelligence, something to be carefully studied and marveled at for a while before we even think of integrating them into all aspects of our personal and work lives?

Obviously, this timeline is pure sci-fi [1], but it highlights a key point: LLMs are smart, yet their intelligence spikes in different areas from ours. This divergence has led to both fascination and confusion. The prevailing mistake, especially in Silicon Valley VC-land, is to frame AI as augmenting or replacing human output. It is an easy but misguided assumption that, at best, leads us onto an off-ramp from the path towards optimal human-AI interface design and, at worst, creates unattainable expectations that will inevitably lead to bubble-bursting unhappiness.

Allow me to explain.

The Alien Intelligence Among Us

LLMs (and Foundation Models in general) are already superhuman in several areas:

Memory: Can recall thousands of details from immense datasets, without blinking. Sometimes they confidently get things wrong or invent their own facts, but hey, aliens like to have fun too.

Pattern Matching Across Oceans of Data: Can absorb data from the internet (including that novel you abandoned in high school), distilling patterns from everything they’ve read.

Language Translation at Scale: Imagine being instantly fluent in every language ever and being able to instantly create essays, poems, code, websites, you name it.

Handling Long Contexts: Can take in a sprawling 200-page technical document or a two-hour YouTube video and maintain coherence across the entire source, while human attention context windows have only been shrinking (with a precipitous drop since the day TikTok was invented).

That said, there are some very human things they’re terrible at:

Ingenuity and Creativity: Sure, they can remix patterns and generate convincing text, but they’re not going to come up with the next Lord of the Rings or solve world hunger. They’re only as creative as their training data allows.

Common Sense Reasoning: Ask an LLM whether it's safe to use a toaster in the bathtub, and it might not respond with the level of panic that such a question deserves. LLM reasoning capabilities have been getting better over time, but it’s unclear if LLMs will truly reason in the way humans do (this is the blue dress/gold dress question for nerds).

Just like an alien species might possess strengths we can't fathom but lack traits we consider essential, LLMs excel in areas that are beyond human capacity, yet struggle with the very things that make our intelligence unique. Their strengths and weaknesses suggest a kind of intelligence that operates on a different wavelength entirely—something to be studied, leveraged, and complemented, but not mistaken for a mere extension of our own thinking.

Why the Concept of AI Teammates Is Flawed

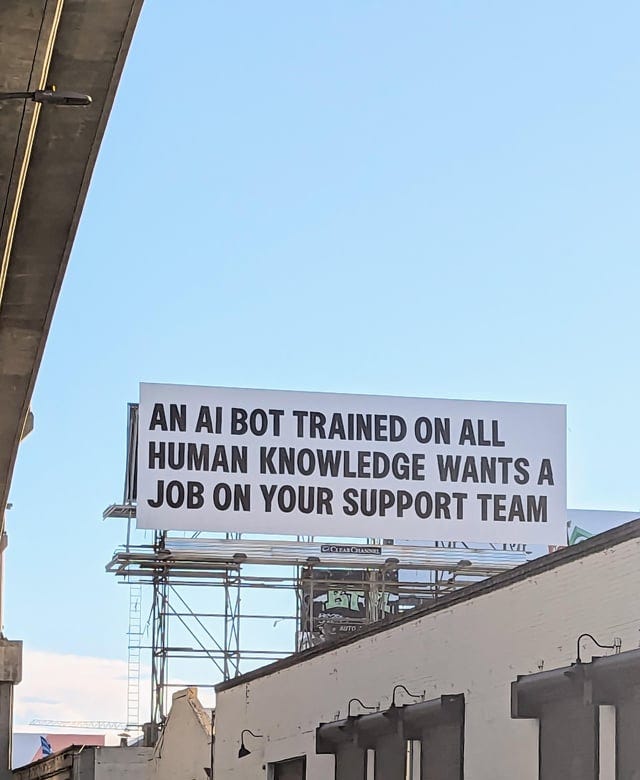

Silicon Valley loves a good narrative, and the current favorite is the "AI teammate" concept. You’ve probably heard it in many forms: AI is going to be your co-worker, your assistant, maybe even your work best friend. If you haven’t, just drive up on 101 and look at any billboard:

But this analogy, while convenient in the short term, will be prove to be a hindrance in the long run.

Anthropomorphization: The Shortcut to Uncanny Valley

We humans have a habit of attributing human-like qualities to machines—sometimes to hilarious effect, sometimes to tragic disappointment. Remember Microsoft's Clippy? It was supposed to act like an intuitive assistant, but instead it quickly became the most infamous office troll, popping up with suggestions that no one needed.

More recent examples include chatbots that attempt to mimic conversational flow. Sure, they can string together sentences and may even tell you a joke, but when tasked with solving complex, context-heavy problems, they can veer off into strange territory, leaving users baffled.

History of Mismatched Expectations

We’ve been down this road before with almost every major advancement in computing interfaces. The late '90s and early 2000s saw AI assistants and speech recognition systems that were heavily marketed as "game-changing," but they weren’t nearly as capable as advertised.

Voice assistants and chatbots in the 2010s, for example, promised conversational fluency, but users quickly discovered that asking them to "play music" or "set a timer" was about the limit of their usefulness — I lived through a whole voice assistant product cycle at Google/Meta and have battle scars to show for it.

Similarly, voice bots introduced to replace human labor in call centers didn’t live up to expectations, frustrating customers and businesses alike with their inability to handle even mildly complex requests.

This is the danger of AI anthropomorphization: we expect too much, then we end up hating it. Like any toxic relationship.

The Gen AI Era: New Dog, Old Tricks

Since the arrival of ChatGPT, the way we interact with AI has rapidly evolved. We’ve gone from simple chatbots to "copilots" assisting in specific tasks, and now there’s talk of AI as "coworkers." Each step in this progression has seen AI systems gain more autonomy and context-awareness, but this one-dimensional view of progress misses the broader potential of what AI can really offer.

It's the same old mistake of attributing too much human-like behavior to our machines, with all the same risks. While it may seem that “this time is different” and we now finally have tech that can behave like a human, that’s not the fundamental reality of LLMs, and sooner or later the cracks will show in every product that pretends otherwise.

Chatbots: Basic but Helpful

Chatbots like ChatGPT, Gemini, Perplexity, and Claude were the first widespread AI tools, answering a wide spectrum of questions and capturing the zeitgeist. They mimicked conversation but quickly hit limits when faced with complex tasks. Useful, but ultimately constrained by their lack of deep context.

Copilots: Smart Assistants

Next came "copilots," like GitHub Copilot and Google's Smart Compose. These systems suggest relevant actions based on the task at hand—anticipating needs, completing code, or refining writing. This shift came with a key improvement: context-awareness. These AI systems have access to more information about the tasks you're performing, so they can offer smarter, more relevant suggestions—but the human operator still holds the reins.

Coworkers: Independent Agents

More recently, AI "coworkers" have been developed to take on more independent tasks, for example in DevOps, where they handle alerts and basic infrastructure problems autonomously. The idea is that AI coworkers can take some of the load off human workers, acting as reliable partners.

This transition from passive assistant to proactive coworker represents a significant increase in autonomy. These AI agents are expected to not only understand the context of tasks but to take action without waiting for human approval. However, this shift assumes that more autonomy and context awareness automatically lead to more useful and powerful AI systems. Reality, as always, isn’t that simple.

One-Dimensional AI Progress Limits Potential

It's tempting to view AI progress as a straight line from chatbots to autonomous coworkers—more context, more autonomy, more intelligence—but this oversimplifies AI’s role:

More Autonomy Isn't Always Better: AI doesn’t need to fully take over tasks to be useful. Copilots that make suggestions often outperform autonomous systems because they amplify human abilities rather than replace them. In many cases, human judgment is critical.

Not One-Size-Fits-All: AI roles—chatbot, copilot, or coworker—aren't hierarchical but situational. Sometimes a simple chatbot suffices, while in other cases, a copilot or coworker excels. The right role depends on the task.

Sets the Wrong Expectations: Projecting AI onto a linear path creates unrealistic user expectations: when AI fails to act like a human "teammate," it breaks the magic of the product experience, leading to user disappointment. It also steers the industry in the wrong direction, wasting time and resources on misguided ambitions.

Missed Opportunities: Focusing solely on more autonomy ignores hybrid forms of collaboration. For example, AI could suggest multiple well-thought-out pathways and leave the human to weigh the pros and cons, assist with knowledge retention, or augment human decision-making in ways that go beyond automation.

Human-AI Teaming is Complex: True collaboration requires nuance, flexibility, and trust—things AI isn’t inherently equipped to handle. Viewing AI as a teammate risks oversimplifying the complexities of human-AI interaction.

Embracing Complementarity Over Imitation

So, if AI isn’t going to be your next teammate, how should we think about working with it? The key is to embrace what LLMs actually are: powerful tools that can complement human ability but aren't built to replace it.

Designing Effective Human-AI Collaboration

Effective collaboration with AI requires an approach that leverages its strengths while acknowledging its limitations. Here are a few design principles for better human-AI interaction:

Task Specialization: AI isn’t going to invent the next iPhone, but it can analyze vast amounts of market data to identify trends and opportunities. The key is to match AI with tasks that align with its strengths: data synthesis, pattern recognition, and large-scale text processing. Let humans handle creativity, strategy, and decision-making.

Defining Clear Boundaries: It's crucial to communicate clearly what AI can and cannot do. Setting realistic expectations avoids the uncanny valley of mismatched hopes. For instance, AI can generate content, but it won’t truly understand the emotional nuances behind it. Should you ask it to write your next novel? Probably not. Should you use it to analyze 10 years' worth of customer reviews to find hidden insights? Absolutely.

Symbiotic Workflows: What could a symbiotic workflow between humans and AI look like? One model is humans making high-level decisions while AI handles complex data manipulation in the background. Imagine product managers setting goals, and AI systems autonomously flagging potential issues or opportunities based on real-time data. Could this allow teams to focus on innovation, while AI does the heavy lifting of operations and monitoring? A flipped model, on the other hand, is one where AI suggests the most promising directions to explore, and teams of humans then go and do the work, verify the outputs, and report results back to the AI for further directions.

Interactive Learning: Human-AI collaboration could also take inspiration from how we work with other human experts. In much the same way that two specialists might engage in iterative feedback, AI systems could prompt humans with new insights, questions, or summaries, and humans could refine and guide the system's direction. Imagine an AI assistant suggesting five different interpretations of data and asking the human operator which approach seems most relevant—turning the user into a sort of "AI coach."

Collaborative Creativity: Although LLMs aren't creative in the human sense, they can serve as idea generators, sparking new directions for projects. What if we treated AI as a brainstorming oracle, like a partner for Socratic thinking? This approach shifts AI's role from being a "doer" to an "idea generator," but with a lot more context than the current chatbots or copilots.
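To make the "Interactive Learning" idea above a little more concrete, here is a minimal Python sketch of one round of "AI proposes, human picks." Everything in it is an assumption for illustration: `propose_interpretations` is a hypothetical stand-in for a real model call (it returns canned suggestions), and `Session` and `run_round` are made-up names, not any product's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Session:
    """Tracks which interpretation the human chose in each round."""
    history: List[str] = field(default_factory=list)


def propose_interpretations(question: str, history: List[str]) -> List[str]:
    """Hypothetical stand-in for a model call; returns candidate readings.

    A real system would prompt an LLM with the question and prior choices.
    """
    base = ["seasonal effect", "pricing change", "data-quality issue"]
    candidates = [f"{question}: {b}" for b in base]
    # Skip directions the human already picked, so each round offers something new.
    return [c for c in candidates if c not in history]


def run_round(session: Session, question: str,
              choose: Callable[[List[str]], int]) -> str:
    """One round: the AI suggests options, the human (via `choose`) decides."""
    options = propose_interpretations(question, session.history)
    if not options:
        raise ValueError("no remaining interpretations to offer")
    picked = options[choose(options)]
    session.history.append(picked)
    return picked
```

In a product, `choose` would be a UI interaction rather than a function argument. The point of the shape is that the AI never acts on its own suggestion; the human's pick is what moves the session forward.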

Questions to Ponder

In the tasks/workflows you are building AI solutions for, where is an AI copilot—providing real-time suggestions and support—more beneficial than a fully autonomous coworker, and vice versa?

How do we design workflows and interfaces that allow humans and AI to operate as partners, not competitors for control?

What new forms of human-AI interaction can we explore that don’t fit into the "more autonomy" trajectory? Could AI serve as a reflective tool for humans to refine their own thinking, but also double up as an agent executing tasks when instructed?

How can we prevent the next wave of AI hype from making the same anthropomorphic mistakes?

What are the implications for AI Safety when we’ve truly embraced the notion that what we have in our hands is an alien intelligence?

Inspiration from Fiction: Human-like vs. Alien-like AI

Technological progress and product innovation are often inspired by science fiction: many innovators grew up with futuristic ideas from movies, shows, and books, like how Star Trek's communicator foreshadowed the flip phone. The folks I’ve worked with to build voice assistant products and I were all inspired by Jarvis, Samantha, or similar AIs from books and movies, which heavily influenced our product thinking.

However, I’ve come to accept that we need to be careful about which fictional models we choose to be influenced by. Leaning too heavily on human-like AI depictions, like C-3PO or Samantha, can lead to unrealistic expectations. Alien-like portrayals, such as HAL 9000 or TARS, better reflect the reality of today’s AI: smart but fundamentally different from human thinking.

Here’s a list of AI in fiction, categorized by how closely they resemble human-like intelligence versus something more alien:

There’s a diverse set of mental models to pick from when designing human-AI interfaces (would love to hear about any interesting ones that I missed). The danger lies in viewing AI through too narrow a lens, based on specific fictional depictions that either completely humanize or dehumanize it. When developing products and imagining how AI will interact with us in the real world, we’re better off with a more nuanced understanding—one that blends the right amount of intelligence augmentation with an appreciation for AI's "alien" nature.

Wrapping up

The progression from chatbots to copilots to coworkers may seem linear, but it’s a limiting view. AI isn't destined to become more "human" with each step—it’s a versatile tool with unique strengths that complement human work. Instead of focusing solely on greater autonomy, we should explore diverse ways for humans and AI to collaborate effectively.

While this wave of AI may end up unbundling human labor and biting off big chunks of value creation across industries, let’s not pigeonhole AI into a path that doesn’t suit its nature, or ours.

[1] Or… it could have happened if maybe Elon never left OpenAI, sat on this tech until SpaceX figured out Mars travel, and staged this whole thing just for lols :) Any budding fiction novelists need plot ideas?
